We offer multiple courses at the University of Freiburg, which are listed below. In addition, we offer a free massive open online course (MOOC) on AutoML; for more information, please visit the course website.

Course content for the upcoming semester is actively being developed. While we're working to provide you with the most up-to-date materials, please note that topics, assignments, and schedules may be adjusted before the start of the term. We appreciate your understanding as we refine the curriculum to best support your learning experience.

The field of tabular data has recently seen an explosion of advances driven by foundation models. In this seminar, we want to dive deep into these very recent advances to understand tabular foundation models!

| Course type: | Seminar |

| Time | Kick-off Session: October 17th, 10-11 (Zoom); Presentation Session 1: November 13th, 4:00-5:30; Presentation Session 2: November 20th, 4:00-5:30; Presentation Session 3: December 11th, 4:00-5:30; Presentation Session 4: December 18th, 4:00-5:30; Presentation Session 5: January 8th, 4:00-5:30; Presentation Session 6: January 15th, 4:00-5:30 |

| Location | In-Person; Seminar Room of the Robot Learning Lab (Building 80) |

| Organizers | Tom Zehle, Omar Swelam, Liam Shi Bin Hoo, Steven Adriaensen, and Frank Hutter |

| Registration | OPEN (max. 12 participants) - IMPORTANT: See notes on registration below! |

| Language | English |

Prerequisites

We require that you have taken lectures on or are familiar with the following:

- Machine Learning

- Deep Learning

Notes on registration

To be eligible, you must have participated in the information session on 17.10 and filled out the form we shared by Monday, October 20th. Spots in this seminar are allocated using the usual lottery system. To enter the lottery, you must register for the seminar in HISinOne and assign it the priority "Preferred" by Monday, October 20th. You will be notified on Thursday, October 23rd.

Important correction: In the kick-off session, we mistakenly announced the registration deadline as October 23rd. The actual deadline, however, is Monday, October 20th.

Please make sure you have filled out the form and completed your HISinOne registration by Monday.

More information about the lottery:

- timeline: https://www.tf.uni-freiburg.de/en/studies-and-teaching/calendar-dates?set_language=en ("Booking deadlines and seat allocation for Bachelor and Master courses")

Organization

- 12 Students

- Presentations in pairs

Grading

- Presentation (100%)

Papers - TBA

Course type: Lecture + Exercise

Time: Lecture: Tuesdays, 14:15 - 15:45; optional exercises: Fridays, 10:15 - 11:45

Location: The course will be in-person.

- Weekly flipped classroom sessions will be held on Tuesdays in HS 00 026 (G.-Köhler-Allee 101)

- Optional exercise sessions will take place on Fridays in HS 00 006 (G.-Köhler-Allee 082)

Organizers: Steven Adriaensen, Abhinav Valada, Mahmoud Safari

Web page: ILIAS - available starting 8am, 14.10.25 (please make sure to also register for all elements of this course module in HISinOne)

Foundations of Deep Learning

Deep learning is one of the fastest-growing and most exciting fields. This course will provide you with a clear understanding of the fundamentals of deep learning, including the foundations of neural network architectures and learning techniques, and everything in between.

Course Overview

The course will be taught in English and will follow a flipped classroom approach.

Every week there will be:

- a video lecture

- an exercise sheet

- a flipped classroom session (Tuesdays, 14:15 - 15:45)

- an attendance-optional exercise session (Fridays, 10:15 - 11:45)

At the end, there will be a written exam (likely an ILIAS test).

Exercises must be completed in groups and submitted within 2 weeks (+ 1 day) of their release.

Your submissions will be graded and you will receive weekly feedback.

Your final grade will be based solely on the written examination; however, a passing grade for the exercises is a prerequisite for passing the course.

Course Material: All material will be made available in ILIAS, and course participation does not require in-person presence. That being said, we offer ample opportunity for direct interaction with the professors during live Q&A sessions and with our tutors during the weekly, attendance-optional, in-class exercise sessions.

Exam: The exam will likely be a test you complete on ILIAS. In-person presence will be required.

Course Schedule

The following are the dates for the flipped classroom sessions (tentative, subject to change):

14.10.25 - Kickoff: Info on Course Organisation

21.10.25 - Week 1: Intro to Deep Learning

28.10.25 - Week 2: From Logistic Regression to MLPs

04.11.25 - Week 3: Backpropagation

11.11.25 - Week 4: Optimization

18.11.25 - Week 5: Regularization

25.11.25 - Week 6: Convolutional Neural Networks (CNNs)

02.12.25 - Week 7: Recurrent Neural Networks (RNNs)

09.12.25 - Week 8: Attention & Transformers

16.12.25 - Week 9: Practical Methodology

13.01.26 - Week 10: Autoencoders, Variational Autoencoders, GANs

20.01.26 - Week 11: Uncertainty in Deep Learning

27.01.26 - Week 12: AutoML for DL

03.02.26 - Round-up / Exam Q&A

In the first session (on 14.10.25), you will get additional information about the course and the opportunity to ask general questions. While there is no need to prepare for this first session, we encourage you to start thinking about forming teams.

The last flipped classroom session will be held on 03.02.26.

Questions?

If you have a question, please post it in the ILIAS forum (so everyone can benefit from the answer).

Course type: B.Sc./M.Sc. projects and theses

Web page: See the Student page for details on open projects and theses

Course type: Oberseminar: Reading Group (no ECTS) on Deep Learning and Hyperparameter Optimization

Time: Wednesdays, 15:00

Location: online

Contact: Julien Siems

Welcome to the Deep Learning Lab, a joint teaching effort of the Robotics (R), Robot Learning (RL), Neurorobotics (NR), Computer Vision (CV), and Machine Learning (ML) Labs. For more details, see the following link: https://rl.uni-freiburg.de/teaching/ss25/dl-lab/

The field of tabular data has recently seen an explosion of advances driven by foundation models. In this seminar, we want to dive deep into these very recent advances to understand tabular foundation models!

| Course type: | Seminar |

| Time | Kick-off Session: April 25th, 10-12 (Zoom); Presentation Session 1: May 13th, 10-12; Presentation Session 2: May 20th, 10-12; Presentation Session 3: May 27th, 10-12; Presentation Session 4: June 3rd, 10-12; Presentation Session 5: June 17th, 10-12; Add-On Poster Session: August 5th, 10-12 |

| Location | In-Person; 82-0-06 Kino |

| Organizers | Jake Robertson, Lennart Purucker, Steven Adriaensen, and Frank Hutter |

| Registration | CLOSED |

| Language | English |

Prerequisites

We require that you have taken lectures on or are familiar with the following:

- Machine Learning

- Deep Learning

Organization

- 20 Students

- Presentations in pairs

- Add-on of your choice

Grading

- Presentation (70%)

- Add-On of your choice (30%)

Papers

- AVICI: https://arxiv.org/abs/2205.12934

- TabPFNv1: https://arxiv.org/abs/2207.01848

__________________

- CARTE: https://arxiv.org/abs/2402.16785

- TabuLa: https://arxiv.org/abs/2310.12746

__________________

- GAMFormer: https://arxiv.org/abs/2410.04560

- Mothernet: https://arxiv.org/abs/2312.08598

__________________

- Drift-Resilient TabPFN: https://arxiv.org/abs/2411.10634

- FairPFN: https://arxiv.org/pdf/2407.05732

__________________

- TabDPT: https://arxiv.org/abs/2410.18164

- TabPFNv2: https://www.nature.com/articles/s41586-024-08328-6

Course type: Live Lectures + (optional) Exercises

Time: Wednesday 14:00 - 16:00, Friday 14:00 - 16:00

Location: HS 00 026 SCHICK-SAAL (G.-Köhler-Allee 101)

Organizers: Joschka Bödecker, Tim Welschehold, Steven Adriaensen

Web page: ILIAS

Kickoff: The first lecture will take place on Wednesday 23.04. There is nothing you need to prepare. During this lecture, we will give you an overview of the course content, its organization, and the history of AI. The first exercise sheet will be released on Friday 25.04 and is due for submission before Friday 02.05, 8:00 am (optional, to receive feedback).

Course Content: This course will introduce basic concepts and techniques used within the field of Artificial Intelligence. Among other topics, we will discuss:

- Introduction to and history of Artificial Intelligence

- Agents

- Problem-solving and search

- Board Games

- Logic and knowledge representation

- Planning

- Representation of and reasoning with uncertainty

- Machine learning

- Deep Learning

Lectures will roughly follow the book Artificial Intelligence: A Modern Approach (3rd edition).

Organization: There are two lecture slots every week, on Wednesday and Friday (14:15 - 15:45). During these slots, there will be live (in-person) lectures. These will be in-person only (not hybrid), but recordings will be made available on ILIAS.

Every week, we will also release an exercise sheet (on Friday) to be submitted before next Friday (8:00am). Your submissions will not be graded, but you will receive feedback from our tutors. On Friday, after the live lecture, one of our tutors will present the master solution and answer any questions you may have. Participation in these weekly sessions and exercises is optional. The only requirement for passing the course is passing the final exam (mode: written, in-person, open book) which will take place on 03.09.2025 at 9:00am.

If you have any questions, please post in the ILIAS forum or contact us at ailect24@informatik.uni-freiburg.de.

Course type: Flipped-classroom Lecture + Exercise

Time: Lecture: Tuesday, 14:00 - 16:00; Exercise: Thursday, 14:00 - 16:00

Location: Lecture: HS 00 026 µ-SAAL (G.-Köhler-Allee 101); Exercise: SR 00 006 (G.-Köhler-Allee 051)

Organizers: André Biedenkapp, Neeratyoy Mallik, Alexander Pfefferle, Steven Adriaensen

Web pages: HISinOne (Lecture), HISinOne (Exercises)

⚠️ You have to register for both the lecture and the exercises in HISinOne ⚠️

First Lecture

Date: Tuesday, April 22, 2025, 14:15.

Requirement for attending: the overview lecture on the course website (the password will be sent via HISinOne email and given in the first in-person lecture).

Background

Applying machine learning (ML), and in particular deep learning (DL), in practice is a challenging task and requires a lot of expertise. Among other things, the success of ML/DL applications depends on many design decisions, including appropriate preprocessing of the data, choosing a well-performing machine learning algorithm, and tuning its hyperparameters, giving rise to a complex pipeline. Unfortunately, even experts need days, weeks, or even months to find well-performing pipelines and can still make mistakes when optimizing them. The course will discuss meta-algorithmic approaches to automatically search for and obtain well-performing machine learning systems using automated machine learning (AutoML). Such AutoML systems allow for faster development of new ML/DL applications, require far less expert knowledge than doing everything from scratch, and often even outperform human developers. In this lecture, you will learn how to use such AutoML systems, develop your own systems, and understand the ideas behind state-of-the-art AutoML approaches.
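To make the idea of automatically searching for a well-performing configuration more concrete, here is a minimal sketch of random search, one of the simplest hyperparameter optimization baselines. It assumes a hypothetical search space (a log-uniform learning rate and an integer number of layers) and replaces actual model training with a made-up function, `toy_validation_error`; neither is taken from the course materials, and practical AutoML systems typically rely on more sample-efficient methods such as Bayesian optimization.

```python
import math
import random

# Hypothetical search space: learning rate (sampled log-uniformly) and number of layers.
SEARCH_SPACE = {
    "learning_rate": (1e-4, 1e-1),
    "num_layers": (1, 8),
}


def sample_config(rng: random.Random) -> dict:
    """Draw one configuration at random from SEARCH_SPACE."""
    lo, hi = SEARCH_SPACE["learning_rate"]
    # Sample the exponent uniformly, i.e. the learning rate on a log scale.
    lr = 10 ** rng.uniform(math.log10(lo), math.log10(hi))
    n_lo, n_hi = SEARCH_SPACE["num_layers"]
    return {"learning_rate": lr, "num_layers": rng.randint(n_lo, n_hi)}


def toy_validation_error(config: dict) -> float:
    """Hypothetical stand-in for 'train a model and measure its validation error'.

    It is minimal at learning_rate = 1e-2 and num_layers = 4 (an arbitrary choice).
    """
    lr_term = (math.log10(config["learning_rate"]) + 2) ** 2
    depth_term = 0.1 * (config["num_layers"] - 4) ** 2
    return lr_term + depth_term


def random_search(n_trials: int, seed: int = 0) -> tuple[dict, float]:
    """Evaluate n_trials random configurations and return the best one found."""
    rng = random.Random(seed)
    best_config, best_error = None, float("inf")
    for _ in range(n_trials):
        config = sample_config(rng)
        error = toy_validation_error(config)
        if error < best_error:
            best_config, best_error = config, error
    return best_config, best_error


if __name__ == "__main__":
    config, error = random_search(n_trials=50)
    print(f"best config: {config}, validation error: {error:.4f}")
```

Because each trial is independent, random search is easy to parallelize; its main weakness is that it ignores the results of earlier trials, which model-based methods such as Bayesian optimization try to exploit.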

Requirements

We strongly recommend that you know the foundations of

- machine learning (ML)

- and deep learning (DL)

We further recommend that you have hands-on experience with:

- Python (3.10+)

- machine learning (scikit-learn)

- deep learning (PyTorch)

Participants should have attended at least one other course on ML and DL in the past.

Topics

The lectures are partitioned into several parts, including:

- Hyperparameter Optimization

- Bayesian Optimization for Hyperparameter Optimization

- Neural Architecture Search

- Dynamic Configuration

- Analysis and Interpretability of AutoML

- Algorithm Selection/Meta-Learning

Organization

The course will be taught in a flipped-classroom style. We will meet once a week for a lecture and once a week (optionally) for an exercise session.

Every week, there will be a new exercise sheet. Most exercises will be practical, and involve programming in Python and teamwork (teams of up to 3 students!) so that you learn how to apply AutoML in practice.

Examination

The course examination will be project-based, allowing students to work in groups to solve an AutoML problem practically. The final assessment will take place as a conference-style poster session where all groups will present their projects. This format provides an opportunity to demonstrate both technical implementation skills and the ability to communicate complex AutoML concepts effectively.

Lecture: Tuesdays, 14-16

Exercise sessions [optional] (hybrid format): Thursdays, 14-16

Exercise submission deadline [strict] (via GitHub): TBA

Course material: The links to access the GitHub Classroom repositories, videos with subtitles, and extra lecture materials will be made available on this page. (The password to that page will be announced in the first session and via HISinOne email.)

The course will be taught in English.

MOOC content: The material is publicly available via the AI-Campus platform . Please register on AI-Campus to access the materials. Grading of the exercises will be done via GitHub classroom.

The lecture materials are open-sourced via https://github.com/automl-edu/AutoMLLecture.

Large language models (LLMs) exhibit remarkable reasoning abilities, allowing them to generalize across a wide range of downstream tasks, such as commonsense reasoning or instruction following. However, as LLMs scale, inference costs become increasingly prohibitive and accumulate significantly over a model's life cycle. In this seminar, we will dive into methods such as quantization, pruning, and knowledge distillation to optimize LLM inference. Please fill out this interest form to participate in the seminar.
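To make "quantization" a little more concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python: weights are divided by a scale s = max|w| / 127, rounded to integers in [-127, 127], and approximately recovered by multiplying back by s, so each weight is reconstructed to within s/2. This is one basic scheme chosen for illustration, not the method of any particular paper on the seminar's list; the function names are hypothetical, and practical LLM quantization usually operates per channel or per group on tensors.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: q = round(w / scale), scale = max|w| / 127."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Fall back to scale 1.0 for an all-zero tensor to avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to the symmetric int8 range [-127, 127].
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Map the int8 codes back to approximate float weights."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.003, 1.0]
    q, s = quantize_int8(w)
    w_hat = dequantize_int8(q, s)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print(f"codes={q}, scale={s:.5f}, max reconstruction error={max_err:.5f}")
```

Storing 8-bit codes plus a single float scale instead of 32-bit floats reduces memory roughly fourfold; small weights such as 0.003 above are the ones most affected by rounding.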

| Course type: | Seminar |

| Time | Five slots, to be determined with all participants. Kick-off is likely on the 24th of October from 2-3pm |

| Location | in-person; SR 04-007, building 106 |

| Organizers | Rhea Sukthanker , Arbër Zela , Mahmoud Safari |

| Registration | Via HISinOne (maximum nine students, registration opens 14th of October) |

| Language | English |

Prerequisites

We require that you have taken lectures on or are familiar with the following:

- Machine Learning

- Deep Learning

- Automated Machine Learning

Organization

After the kick-off meeting, everyone is assigned a paper (one or two, depending on the content). Each student then works through the paper(s) assigned to them and prepares two presentations.

- The first presentation will focus on establishing the background and motivation for the work, and on giving a concise overview of the approach proposed in the paper

- The second presentation will focus on the details of the approach, the results and takeaways from the paper and an “add-on” described below

Students will contribute an "add-on" related to the paper for the final report. This includes, but is not limited to, a thorough literature review, reproducing some experiments, profiling the inference latency of the LLMs, implementing a part of the paper, or providing a Colab demo that applies the method from the paper to a different LLM. Students can (e-)meet with Rhea Sukthanker for feedback and any questions (e.g., to discuss a potential "add-on").

Grading

- Presentations: 50% (two times 25min + 15min Q&A)

- Report: 30% (4 pages in AutoML Conf format, due one week after the last end-term session)

- Add-on: 20%

List of Potential Papers

- Are Sixteen Heads Really Better than One? https://arxiv.org/pdf/1905.10650.pdf

- FLIQS: One-Shot Mixed-Precision Floating-Point and Integer Quantization Search https://arxiv.org/abs/2308.03290

- Minitron https://www.arxiv.org/abs/2407.14679

- MiniLLM https://openreview.net/pdf?id=5h0qf7IBZZ

- Compressing LLMs: The Truth is Rarely Pure and Never Simple https://arxiv.org/abs/2310.01382

- Wanda: https://arxiv.org/pdf/2306.11695

- SparseGPT: https://arxiv.org/abs/2301.00774

- On the Effect of Dropping Layers of Pre-trained Transformer Models https://arxiv.org/pdf/2004.03844.pdf

- Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy Lifting, the Rest Can Be Pruned https://arxiv.org/pdf/1905.09418.pdf

- A Fast Post-Training Pruning Framework for Transformers https://proceedings.neurips.cc/paper_files/paper/2022/file/987bed997ab668f91c822a09bce3ea12-Paper-Conference.pdf

- LLM-Pruner: On the Structural Pruning of Large Language Models https://arxiv.org/pdf/2305.11627.pdf

- Compresso https://arxiv.org/pdf/2310.05015.pdf

- LLM Surgeon https://arxiv.org/pdf/2312.17244.pdf

- Shortened Llama https://arxiv.org/abs/2402.02834

- SliceGPT https://arxiv.org/abs/2401.15024

- Structural pruning of large language models via neural architecture search https://arxiv.org/abs/2405.02267

- Not all Layers of LLMs are Necessary during Inference https://arxiv.org/pdf/2403.02181.pdf

- ShortGPT: Layers in Large Language Models are More Redundant Than You Expect https://arxiv.org/abs/2403.03853


- FLAP: Fluctuation-based adaptive structured pruning for large language models https://arxiv.org/abs/2312.11983

- Bonsai: Everybody Prune Now: Structured Pruning of LLMs with only Forward Passes https://arxiv.org/pdf/2402.05406.pdf

- The Unreasonable Ineffectiveness of the Deeper Layers https://arxiv.org/pdf/2403.17887v1.pdf

- Sheared Llama https://arxiv.org/abs/2310.06694

- Netprune https://arxiv.org/pdf/2402.09773.pdf

- MiniLLM: Knowledge Distillation of Large Language Models https://arxiv.org/pdf/2306.08543

- A Surve...

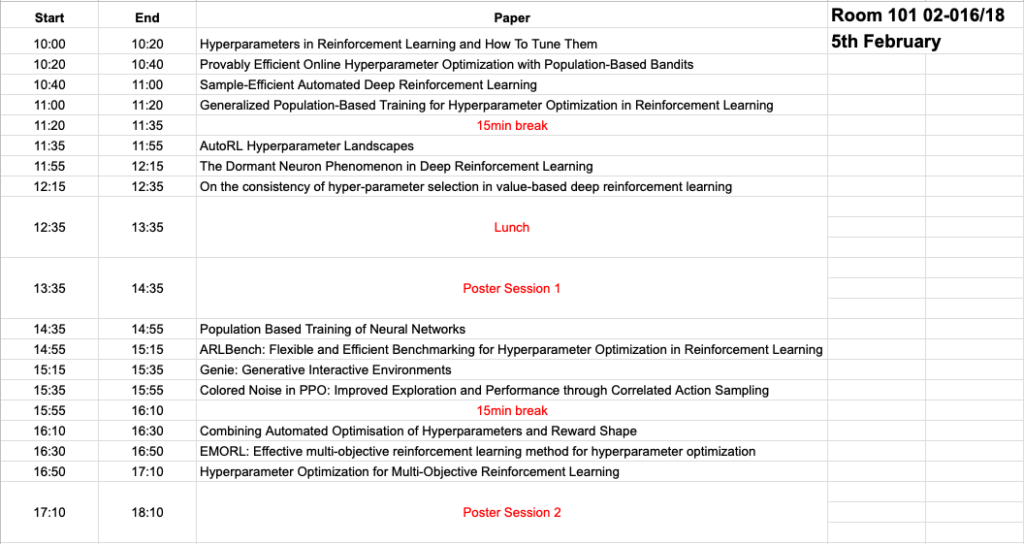

| Course type: | Block Seminar |

| Time: | Kickoff Session: 17.10.24, 14:00 - 16:00; Presentation Sessions: 05.02.25, 10:00 - 18:00 |

| Location: | Kickoff Session: SR 02-016/18 (G.-Köhler-Allee 101); Presentation Sessions: SR 02-016/18 (G.-Köhler-Allee 101) |

| Organizers: | André Biedenkapp, Noor Awad, Raghu Rajan, M Asif Hasan, Baohe Zhang |

| Web page: | HISinOne, Local Page |

You can register for the seminar via HISinOne.

Note: To ensure that we can print the posters in time, they have to be submitted by noon on 31.01.25 to Baohe Zhang (https://nr.uni-freiburg.de/people/baohe-zhang).

Background

Hyperparameter optimization (HPO) is a powerful approach to achieve the best performance on many different problems. However, reinforcement learning (RL) offers unique challenges, such that classical HPO and automated machine learning approaches are often not directly applicable. In this seminar, we will discuss the unique challenges posed by the automated reinforcement learning (AutoRL) problem and discuss a variety of solution approaches, ranging from static to dynamic configuration methods. We further explore topics in reinforcement learning, such as curriculum learning, to understand how environment design can impact the learning performance of RL agents.

Requirements

We require that you have taken lectures on

- Reinforcement Learning, and/or

- Machine Learning

We strongly recommend that you have attended lectures on

- Automated Machine Learning

- Reinforcement Learning

Organization

The seminar is intended to be held as a block seminar, with sessions at the end of the semester in which all students will present their papers. All students are required to read the relevant literature to prepare for this session. After each presentation, we will have time for a question & discussion round and all participants are expected to take part in these. Each student has to write a short paper about their assigned topic which is to be handed in one week prior to their presentation.

During the semester, students are expected to meet once with their assigned supervisor to discuss their paper and clarify open questions. Before this meeting, however, students are required to meet with a "study buddy", whose role is to act as a discussion partner, clarify the first set of questions, and give feedback on the presentation.

Grading

- Presentation: 60% (20min + 10min Q&A)

- Paper: 20% (4 pages in AutoML Conf format, due one week prior to your presentation)

- Participation in Discussions: 20%

Literature

Relevant literature can be found at https://autorl.org/ and https://autorlworkshop.github.io/. These pages list many recent papers and blog posts within the scope of the seminar, though not all of the papers that we intend to cover. For a general overview of the topic, please refer to the survey on AutoRL (https://jair.org/index.php/jair/article/view/13596).

Kick-Off Slides

https://docs.google.com/presentation/d/1jt-RDAZfqnPqKS5YXyuRWuqgvX3wd-kHYnIZf8uylwU/edit?usp=sharing (best viewed in presentation mode due to overlapping animations)

Paper Pool

https://docs.google.com/document/d/1Dp7edapWlOFm7Irihgx8pLJSBNwfGa3nCkspeFVTAcA/edit?usp=sharing

The field of tabular data has recently seen an explosion of advances driven by large language models (LLMs), deep learning algorithms, and foundation models. In this seminar, we want to dive deep into these very recent advances to understand them.

| Course type: | Seminar |

| Time | Five slots, to be determined with all participants. Kick-off is likely on the 23rd of October from 10 to 11 am. |

| Location | in-person; Meeting Room in our ML Lab |

| Organizers | Lennart Purucker |

| Registration | Via HISinOne (maximum six students, registration opens 14th of October) |

| Language | English |

Prerequisites

We require that you have taken lectures on or are familiar with the following:

- Machine Learning

- Deep Learning

- Automated Machine Learning

Organization

After the kick-off meeting, everyone is assigned a paper about recent advances in deep learning (one or multiple papers, depending on the content). Everyone is then expected to understand and digest their assigned papers and prepare two presentations. The first presentation is given during the mid-term sessions (two separate slots), and the second during the end-term sessions (two separate slots).

- The first presentation will focus on the relationship between the papers, any relevant related work, any background to understand the paper, and the greater context of the work.

- The second presentation will focus on the paper's contributions, describing them in detail.

In addition to the second presentation, students are expected to contribute an "add-on" related to the paper for the final report. This includes but is not limited to reproducing some experiments, implementing a part of the paper, providing a greater literature survey, fact-checking citations, experiments, or methodology, building a WebUI or demo for the paper, etc. Students can (e-)meet with Lennart Purucker for feedback and any questions (e.g., to discuss a potential "add-on").

Grading

- Presentations: 40% (two times 20min + 20min Q&A)

- Report: 40% (4 pages in AutoML Conf format, due one week after the last end-term session)

- Add-on: 20% (due with the report)

Short(er) List of Potential Papers / Directions:

LLMs

- https://arxiv.org/abs/2409.03946

- https://arxiv.org/abs/2403.20208

- https://arxiv.org/abs/2404.00401 , https://aclanthology.org/2024.lrec-main.1179/ , https://arxiv.org/abs/2408.09174

- https://arxiv.org/abs/2404.05047

- https://arxiv.org/abs/2404.17136

- https://arxiv.org/abs/2404.18681 , https://arxiv.org/abs/2405.17712 , https://arxiv.org/abs/2406.08527

- https://arxiv.org/abs/2405.01585

- https://arxiv.org/abs/2407.02694

- https://arxiv.org/abs/2408.08841

- https://arxiv.org/abs/2408.11063

- https://arxiv.org/abs/2403.19318

- https://arxiv.org/abs/2403.06644

- https://arxiv.org/abs/2402.17453 , https://arxiv.org/abs/2409.07703

- https://arxiv.org/abs/2403.01841

Deep Learning

- https://arxiv.org/abs/2405.08403

- https://arxiv.org/abs/2307.14338

- https://arxiv.org/abs/2305.06090 , https://arxiv.org/abs/2406.00281

- https://arxiv.org/pdf/2404.17489

- https://arxiv.org/abs/2405.14018 , https://arxiv.org/abs/2406.05216 , https://arxiv.org/abs/2406.17673 , https://arxiv.org/abs/2409.05215 , https://arxiv.org/abs/2406.14841

- https://arxiv.org/abs/2408.06291

- https://arxiv.org/abs/2408.07661

- https://arxiv.org/abs/2409.08806

- https://arxiv.org/abs/2404.00776

Foundation Models / In-Context Learning

Course type: Lecture + Exercise

Time: Lecture: Tuesdays, 10:15 - 11:45; optional exercises: Fridays, 10:15 - 11:45

Location: The course will be in-person.

- Weekly flipped classroom sessions will be held on Tuesdays in HS 00 006 (G.-Köhler-Allee 082)

- Optional exercise sessions will take place on Fridays in HS 00 006 (G.-Köhler-Allee 082)

Organizers: Steven Adriaensen, Abhinav Valada, Mahmoud Safari, Rhea Sukthanker, Johannes Hog

Web page: ILIAS - available starting 8am, 15.10.24 (please make sure to also register for all elements of this course module in HISinOne)

Foundations of Deep Learning

Deep learning is one of the fastest-growing and most exciting fields. This course will provide you with a clear understanding of the fundamentals of deep learning, including the foundations of neural network architectures and learning techniques, and everything in between.

Course Overview

The course will be taught in English and will follow a flipped classroom approach.

Every week there will be:

- a video lecture

- an exercise sheet

- a flipped classroom session (Tuesdays, 10:15 - 11:45)

- an attendance-optional exercise session (Fridays, 10:15 - 11:45)

At the end, there will be a written exam (likely an ILIAS test).

Exercises must be completed in groups and submitted within 2 weeks (+ 1 day) of their release.

Your submissions will be graded and you will receive weekly feedback.

Your final grade will be based solely on the written examination; however, a passing grade for the exercises is a prerequisite for passing the course.

Course Material: All material will be made available in ILIAS, and course participation does not require in-person presence. That being said, we offer ample opportunity for direct interaction with the professors during live Q&A sessions and with our tutors during the weekly, attendance-optional, in-class exercise sessions.

Exam: The exam will likely be a test you complete on ILIAS. In-person presence will be required.

Course Schedule

The following are the dates for the flipped classroom sessions (tentative, subject to change):

15.10.24 - Kickoff: Info on Course Organisation

22.10.24 - Week 1: Intro to Deep Learning

29.10.24 - Week 2: From Logistic Regression to MLPs

05.11.24 - Week 3: Backpropagation

12.11.24 - Week 4: Optimization

19.11.24 - Week 5: Regularization

26.11.24 - Week 6: Convolutional Neural Networks (CNNs)

03.12.24 - Week 7: Recurrent Neural Networks (RNNs)

10.12.24 - Week 8: Attention & Transformers

17.12.24 - Week 9: Practical Methodology

07.01.25 - Week 10: Autoencoders, Variational Autoencoders, GANs

14.01.25 - Week 11: Uncertainty in Deep Learning

21.01.25 - Week 12: AutoML for DL

28.01.25 - Round-up / Exam Q&A

In the first session (on 15.10.24), you will get additional information about the course and the opportunity to ask general questions. While there is no need to prepare for this first session, we encourage you to start thinking about forming teams.

The last flipped classroom session will be held on 28.01.25.

Competition Results

Flower Classification Challenge

This semester, we organized an optional student competition. In this challenge, students were to train a model to perform class prediction on a flower dataset (more info here).

There were two tracks:

- Fast-track (models with fewer than 100k parameters)

- Large-track (models with fewer than 25M parameters)

The winners per track were determined based on the accuracy of the submitted models on a hidden test set.
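As a rough illustration of the two parameter budgets, the sketch below counts the parameters of a hypothetical stack of fully connected layers (a layer with n_in inputs and n_out outputs has n_in * n_out weights plus n_out biases) and reports which budget limits the count stays under. The layer sizes and helper names are made up for illustration and say nothing about the competition's actual models or rules; for an arbitrary PyTorch model, the same count is commonly obtained with `sum(p.numel() for p in model.parameters())`.

```python
FAST_TRACK_LIMIT = 100_000      # Fast-track: fewer than 100k parameters
LARGE_TRACK_LIMIT = 25_000_000  # Large-track: fewer than 25M parameters


def linear_stack_params(layer_sizes: list[int]) -> int:
    """Parameter count of consecutive fully connected layers with biases (hypothetical model)."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def satisfied_budgets(n_params: int) -> list[str]:
    """Return the names of the parameter budgets that n_params stays under."""
    budgets = []
    if n_params < FAST_TRACK_LIMIT:
        budgets.append("Fast-track")
    if n_params < LARGE_TRACK_LIMIT:
        budgets.append("Large-track")
    return budgets


if __name__ == "__main__":
    # Hypothetical input: a flattened 3x32x32 image, one hidden layer, 10 output classes.
    for sizes in ([3072, 32, 10], [3072, 64, 10]):
        n = linear_stack_params(sizes)
        print(sizes, n, satisfied_budgets(n))
```

Doubling the hidden width from 32 to 64 roughly doubles the count here, which is enough to exceed the 100k Fast-track limit; this is why small-budget tracks tend to favor parameter-efficient architectures such as convolutional networks.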

The Fast-track podium (12 entries):

- 1st place: Jan Sander

- 2nd place: Sepuh Hovhannisyan

- 3rd place: Ole Ossen

The Large-track podium (9 entries):

- 1st place: Devika Panneer Selvam

- 2nd/3rd place: Ali Mert Aydın, Oğuz Kuyucu, Salih Bora Öztürk

- 2nd/3rd place: Ole Ossen

Congratulations!

Questions?

If you have a question, please post it in the ILIAS forum (so everyone can benefit from the answer).

Welcome to the Deep Learning Lab, a joint teaching effort of the Robotics (R), Robot Learning (RL), Neurorobotics (NR), Computer Vision (CV), and Machine Learning (ML) Labs. For more details, see the following link: https://rl.uni-freiburg.de/teaching/ss24/dl-lab/

Course type: Flipped-classroom Lecture + Exercise

Time: Mondays, 14:00 - 16:00

Location: HS 00 036 SCHICK-SAAL (G.-Köhler-Allee 101)

Organizers: Frank Hutter, Heri Rakotoarison, Neeratyoy Mallik, Eddie Bergman, Johannes Hog, Martin Mráz, Steven Adriaensen, Noor Awad, André Biedenkapp

Web page: HISinOne

First Lecture

Date : Monday, April 15, 2024, 14:15.

Requirement for attending: the overview lecture on the course website (the password will be sent via HISinOne email and given in the first in-person lecture).

Background

Applying machine learning (ML), and in particular deep learning (DL), in practice is a challenging task and requires a lot of expertise. Among other things, the success of ML/DL applications depends on many design decisions, including appropriate preprocessing of the data, choosing a well-performing machine learning algorithm, and tuning its hyperparameters, giving rise to a complex pipeline. Unfortunately, even experts need days, weeks, or even months to find well-performing pipelines and can still make mistakes when optimizing them. The course will discuss meta-algorithmic approaches to automatically search for and obtain well-performing machine learning systems using automated machine learning (AutoML). Such AutoML systems allow for faster development of new ML/DL applications, require far less expert knowledge than doing everything from scratch, and often even outperform human developers. In this lecture, you will learn how to use such AutoML systems, develop your own systems, and understand the ideas behind state-of-the-art AutoML approaches.

Requirements

We strongly recommend that you know the foundations of

- machine learning (ML)

- and deep learning (DL)

We further recommend that you have hands-on experience with:

- Python (3.8+)

- machine learning (scikit-learn)

- deep learning (PyTorch)

Participants should have attended at least one other course on ML and DL in the past.

Topics

The lectures are partitioned into several parts, including:

- Hyperparameter Optimization

- Bayesian Optimization for Hyperparameter Optimization

- Neural Architecture Search

- Dynamic Configuration

- Analysis and Interpretability of AutoML

- Algorithm Selection/Meta-Learning

Organization

The course will be taught in a flipped-classroom style. We will meet once a week for a lecture and once a week (optionally) for an exercise session.

Every week, there will be a new exercise sheet. Most exercises will be practical, and involve programming in Python and teamwork (teams of up to 3 students!) so that you learn how to apply AutoML in practice.

Lecture: Mondays, 14-16

Exercise sessions [optional] (hybrid format): Thursdays, 14-16

Exercise submission deadline [strict] (via GitHub): Tuesdays, 23:59

Course material: The links to access the GitHub Classroom repositories, videos with subtitles, and extra lecture materials will be made available on this page. (The password to that page will be announced in the first session and via HISinOne email.)

The course will be taught in English.

MOOC content: The material is publicly available via the AI-Campus platform . Please register on AI-Campus to access the materials. Grading of the exercises will be done via GitHub classroom.

The lecture materials are open-sourced via https://github.com/automl-edu/AutoMLLecture .

Course type: Live Lectures + (optional) Exercises

Time: Tuesday 10:00-12:00, Friday 10:00-12:00

Location: HS 00 036 SCHICK-SAAL (G.-Köhler-Allee 101)

Organizers: Joschka Bödecker, Tim Welschehold, Steven Adriaensen

Web page: ILIAS

Kickoff: The first lecture will take place on Tuesday 16.04. There is nothing you need to prepare. During this lecture, we will give you an overview of the course content, its organization, and the history of AI. The first exercise sheet will be released on Friday 19.04 and is due for submission before Friday 26.04, 8:00 am (optional, to receive feedback).

Course Content: This course will introduce basic concepts and techniques used within the field of Artificial Intelligence. Among other topics, we will discuss:

- Introduction to and history of Artificial Intelligence

- Agents

- Problem-solving and search

- Board Games

- Logic and knowledge representation

- Planning

- Representation of and reasoning with uncertainty

- Machine learning

- Deep Learning

Lectures will roughly follow the book Artificial Intelligence: A Modern Approach (3rd edition).

Organization: There are two lecture slots every week, on Tuesday and Friday (10:15-11:45). During these slots, there will be live (in-person) lectures. These will be in-person only (not hybrid), but recordings will be made available on ILIAS.

Every week, we will also release an exercise sheet (on Friday), to be submitted before the following Friday (8:00 am). Your submissions will not be graded, but you will receive feedback from our tutors. On Friday, after the live lecture, one of our tutors will present the master solution and answer any questions you may have. Participation in these weekly sessions and exercises is optional. The only requirement for passing the course is passing the final exam (mode: written, in-person, open book), which will take place on 06.09.2024 at 9:00 am.

If you have any questions, please post in the ILIAS forum or contact us at ailect24@informatik.uni-freiburg.de

Course type: Lecture + Exercise

Time: Lecture: Tuesday, 10:15-11:45; optional exercises: Friday, 10:00-12:00

Location: The course will be in person:

- Weekly flipped classroom sessions will be held on Tuesday in the Kinohörsaal (G.-Köhler-Allee 82)

- Optional exercise sessions will take place on Friday in HS 00 006 (G.-Köhler-Allee 082)

Organizers: Frank Hutter, Abhinav Valada, André Biedenkapp, Mahmoud Safari, Rhea Sukthanker

Web page: ILIAS (please make sure to also register for all elements of this course module in HISinOne: Lecture + Exercise)

Foundations of Deep Learning

Deep learning is one of the fastest-growing and most exciting fields. This course will provide you with a clear understanding of the fundamentals of deep learning, including the foundations of neural network architectures and learning techniques, and everything in between.

Course Overview

The course will be taught in English and will follow a flipped classroom approach.

Every week there will be:

- a video lecture

- an exercise sheet

- a flipped classroom session (Mondays 14:15 - 15:45)

- an attendance-optional exercise session (Fridays)

At the end, there will be a written exam (likely an ILIAS test).

Exercises must be completed in groups and submitted within one week (+ 1 day) of their release.

Your submissions will be graded and you will receive weekly feedback.

Your final grade will be based solely on the written examination; however, a passing grade for the exercises is a prerequisite for passing the course.

Course Material: All material will be made available in ILIAS, and course participation will not require in-person presence. That being said, we offer ample opportunity for direct interaction with the professors during live Q&A sessions and with our tutors during the weekly attendance-optional in-class exercise sessions.

Exam: The exam will likely be a test you complete on ILIAS. In-person presence may be required (TBA).

Course Schedule

The following are the dates for the flipped classroom sessions:

16.10.23 - Kickoff: Info Course Organisation / Team Formation

23.10.23 - ChatGPT Panel Discussion

30.10.23 - Week 1: Intro to Deep Learning

06.11.23 - Week 2: From Logistic Regression to MLPs

13.11.23 - Week 3: Backpropagation

20.11.23 - Week 4: Optimization

27.11.23 - Week 5: Regularization

04.12.23 - Week 6: Convolutional Neural Networks (CNNs)

11.12.23 - Week 7: Recurrent Neural Networks (RNNs)

18.12.23 - Week 8: Practical Methodology

08.01.24 - Week 9: Attention & Transformers

15.01.24 - Week 10: Auto-Encoders, Variational Auto-Encoders, GANs

22.01.24 - Week 11: Uncertainty in Deep Learning

29.01.24 - Week 12: AutoML for DL

05.02.24 - Round-up / Exam Q&A

In the first session (on 16.10.23), you will get additional information about the course and the opportunity to ask general questions (and form groups!). While there is no need to prepare for this first session, we encourage you to start thinking about forming teams.

The last flipped classroom session will be held on 05.02.24.

Competition Results

Flower Classification Challenge

This semester, we organized an optional student competition. In this challenge, students were to train a model to perform class prediction on a flower dataset (more info here).

There were two tracks:

- Fast-track (models with fewer than 100k parameters)

- Large-track (models with fewer than 25M parameters)

The winners per track were determined based on the accuracy of the submitted models on a hidden test set.

The Fast-track podium:

- 1st place: Simon Rauch, Philip Zimmer

- 2nd place: Aisan Fathi, Tilo Heep, Anshul Gupta

- 3rd place: Ali Elganzory, Emmanuel Hofmann, Luis Barragan

The Large-track podium:

- 1st place: Ali Elganzory, Emmanuel Hofmann, Luis Barragan

- 2nd place: Gerrit Freiwald, Lyubomir Ivanov, Yu Tung Wang

- 3rd place: Aisan Fathi, Tilo Heep, Anshul Gupta

Congratulations!

Questions?

If you have a question, please post it in the ILIAS forum (so everyone can benefit from the answer).

Course type: Lab Course

Time & Location:

- 24.11.23; MST Pool, Building 74, 14:00-15:00: Introduction to Lab

- 27.11.23-30.11.23; MST Pool, Building 74, 09:00-17:30: Participation in the AutoML Fall School

- During the semester: A supervised project related to AutoML, on themes relating to ensembling and the capabilities of AutoML systems

- 07.02.24; Room 13, Building 74, 14:00-16:00: Poster presentation

Organizers: Eddie Bergman, Lennart Purucker, Frank Hutter

Web page: HISinOne, Local Page

Note: You do not need to register for the fall school if you have registered for this course. Your participation in the fall school will be free of charge. You can register for the course via HISinOne .

Background

Applying machine learning (ML) and in particular deep learning (DL) in practice is a challenging task and requires a lot of expertise. Among other things, the success of ML/DL applications depends on many design decisions, including an appropriate preprocessing of the data, choosing a well-performing machine learning algorithm and tuning its hyperparameters, giving rise to a complex pipeline. Unfortunately, even experts need days, weeks or even months to find well-performing pipelines and can still make mistakes when optimizing their pipelines.

The lab course will start with a fall school featuring lectures on hot topics in AutoML such as "automating data science", "automated reinforcement learning", or "neural architecture search", as well as tutorials on various topics in AutoML. After this fall school, students will take on a project themed around ensembling or the capabilities of AutoML systems.

Requirements

We require that you have attended a lecture on

- Machine Learning, and/or

- Deep Learning

We strongly recommend that you have taken the AutoML lecture.

Organization

Students take part in the AutoML Fall School (remotely, free of charge) to hear from world-leading AutoML experts about current hot topics in the field. After this week, we will meet to present potential projects, from which students are free to choose the one they want to tackle. We expect students to work in groups of up to three. During the semester, the students will meet with a supervisor to discuss any issues they are facing. At the end of the semester, all groups will present their work in a poster session.

Grading

Grades are determined by the quality of the project.

Important Dates

- 24.11.23; MST Pool, Building 74, 14:00-15:00 Introduction to Lab

- 27.11.23 - 30.11.23; MST Pool, Building 74, 09:00-17:30 participation in the AutoML Fall School

- 07.02.24; Room 13, Building 74, 14:00-16:00 : Poster presentation

Tabular data has long been overlooked by deep learning research, despite being the most common data type in real-world machine learning applications. While deep learning methods excel in many ML applications, tabular data classification problems are still dominated by gradient-boosted decision trees. More recently, deep learning-based approaches have been proposed that show remarkable efficiency and performance improvements. In this seminar, we will discuss this recent literature, exploring the most promising techniques and approaches for handling tabular data in deep learning.

| Course type: | Seminar |

| Time | Every Tuesday, 14:15-16:00 |

| Location | in-person; Room SR 00-006, Building 051 |

| Organizers | Herilalaina Rakotoarison, Arbër Zela, Fabio Ferreira, Frank Hutter |

| Registration | Via HISinOne |

| Web page | Link to the seminar webpage |

Welcome to the Deep Learning Lab, a joint teaching effort of the Robotics (R), Robot Learning (RL), Neurorobotics (NR), Computer Vision (CV), and Machine Learning (ML) Labs. For more details, see: https://rl.uni-freiburg.de/teaching/ss23/laboratory-deep-learning-lab

Course type: Live Lectures + (optional) Exercises

Time: Tuesday 10:00-12:00, Friday 10:00-12:00

Location: HS 00 036 SCHICK-SAAL (G.-Köhler-Allee 101)

Organizers: Joschka Bödecker, Tim Welschehold, Steven Adriaensen

Web page: ILIAS

Course Content: This course will introduce basic concepts and techniques used within the field of Artificial Intelligence. Among other topics, we will discuss:

- Introduction to and history of Artificial Intelligence

- Agents

- Problem-solving and search

- Board Games

- Logic and knowledge representation

- Planning

- Representation of and reasoning with uncertainty

- Machine learning

- Deep Learning

Lectures will roughly follow the book Artificial Intelligence: A Modern Approach (3rd edition).

Organization: There are two lecture slots every week, on Tuesday and Friday (10:15-11:45). The first lecture will be on Tuesday 18.04 (see ILIAS for all appointments). During these slots, there will be live (in-person) lectures. These will be in-person only (not hybrid), but recordings will be made available on ILIAS.

Every week, we will also release an exercise sheet (on Friday), to be submitted before the following Friday (8:00 am). Your submissions will not be graded, but you will receive feedback from our tutors. On Friday, after the live lecture, one of our tutors will present the master solution and answer any questions you may have. The first exercise is due on 28.04. Participation in these weekly sessions and exercises is optional. The only requirement for passing the course is passing the final exam (date/format TBD).

If you have any questions, please post in the ILIAS forum or contact us at ailect23@informatik.uni-freiburg.de

Course type: Flipped-classroom Lecture + Exercise

Time: Monday 14:00-16:00

Location: SR 01-009/13 (G.-Köhler-Allee 101)

Organizers: Frank Hutter, André Biedenkapp, Heri Rakotoarison, Neeratyoy Mallik, Eddie Bergman

Web page: HISinOne

First Lecture

Date: April 17, 2023.

Requirement for attending: The Overview lecture from the AutoML MOOC.

Background

Applying machine learning (ML), and in particular deep learning (DL), in practice is a challenging task that requires a lot of expertise. Among other things, the success of ML/DL applications depends on many design decisions, including appropriate data preprocessing, the choice of a well-performing machine learning algorithm, and the tuning of its hyperparameters, which together give rise to a complex pipeline. Unfortunately, even experts need days, weeks, or even months to find well-performing pipelines and can still make mistakes when optimizing them. The course will discuss meta-algorithmic approaches that automatically search for and obtain well-performing machine learning systems by means of automated machine learning (AutoML). Such AutoML systems allow for faster development of new ML/DL applications, require far less expert knowledge than building everything from scratch, and often even outperform human developers. In this lecture, you will learn how to use such AutoML systems, how to develop your own, and the ideas behind state-of-the-art AutoML approaches.

Requirements

We strongly recommend that you know the foundations of

- machine learning (ML)

- deep learning (DL)

We further recommend that you have hands-on experience with:

- Python (3.8+)

- machine learning (scikit-learn)

- deep learning (PyTorch)

Participants should have previously attended at least one other course on ML and DL.

Topics

The lectures are partitioned into several parts, including:

- Hyperparameter Optimization

- Bayesian Optimization for Hyperparameter Optimization

- Neural Architecture Search

- Dynamic Configuration

- Analysis and Interpretability of AutoML

- Algorithm Selection/Meta-Learning

The weekly slides and exercises are available at https://ml.informatik.uni-freiburg.de/github-classroom-automl-2023

Organization

The course will be taught in a flipped-classroom style. We will meet once a week for a combined Q&A and exercise session. Roughly every week, there will be a new exercise sheet. Most exercises will be practical and involve programming in Python and teamwork (teams of up to 3 students!) so that you learn how to apply AutoML in practice.

Lecture/Exercise: Monday 14 - 16

The material is publicly available via the AI-Campus platform. Please register on AI-Campus to access the materials. Grading of the exercises will be done via GitHub Classroom.

The links to access the GitHub Classroom repositories will be made available on this page. (The password to that page will be announced in the first session.)

The course will be taught in English.

The lecture materials are open-sourced via https://github.com/automl-edu/AutoMLLecture

Course type: Lecture + Exercise

Time: Monday, 14:15-15:45; first meeting: Oct. 17

Location: The course will be hybrid:

- Weekly flipped classroom sessions will be held on Monday in HS 00 026 µ-SAAL (G.-Köhler-Allee 101) and via Zoom. See ILIAS for the Zoom link.

- Optional exercise sessions will take place on Friday 10:15-11:45 in HS 00 006 (G.-Köhler-Allee 082)

Organizers: Frank Hutter, Abhinav Valada, Steven Adriaensen, Mahmoud Safari

Web page: ILIAS (please make sure to also register for all elements of this course module in HISinOne)

Foundations of Deep Learning

Deep learning is one of the fastest-growing and most exciting fields. This course will provide you with a clear understanding of the fundamentals of deep learning, including the foundations of neural network architectures and learning techniques, and everything in between.

Course Overview

The course will be taught in English and will follow a flipped classroom approach.

Every week there will be:

- a video lecture

- an exercise sheet

- a flipped classroom session (hybrid, Mondays 14:15 - 15:45)

- an attendance-optional exercise session (in-class/offline, Fridays 10:15-11:45)

At the end, there will be a written exam (likely an ILIAS test).

Exercises must be completed in groups and submitted within one week (+ 1 day) of their release.

Your submissions will be graded and you will receive weekly feedback.

Your final grade will be based solely on the written examination; however, a passing grade for the exercises is a prerequisite for passing the course.

Hybrid course: All material will be made available online, and course participation will not require in-person presence. That being said, we offer ample opportunity for direct interaction with the professors during live Q&A sessions (HS 00 026 µ-SAAL, G.-Köhler-Allee 101) and with our tutors during the weekly attendance-optional in-class exercise sessions (HS 00-006, G.-Köhler-Allee 082).

Exam: The exam will likely be a test you complete on ILIAS. In-person presence may be required (TBA).

Course Schedule

The following are the dates for the flipped classroom sessions:

17.10.22 - Kickoff: Info Course Organisation / Team Formation

24.10.22 - Week 1: Intro to Deep Learning

31.10.22 - Week 2: From Logistic Regression to MLPs

07.11.22 - Week 3: Backpropagation

14.11.22 - Week 4: Optimization

21.11.22 - Week 5: Regularization

28.11.22 - Week 6: Convolutional Neural Networks (CNNs)

05.12.22 - Week 7: Recurrent Neural Networks (RNNs)

12.12.22 - Week 8: Attention & Transformers

19.12.22 - Week 9: Practical Methodology

09.01.23 - Week 10: Hyperparameter Optimization

16.01.23 - Week 11: Neural Architecture Search

23.01.23 - Week 12: Auto-Encoders, Variational Auto-Encoders, GANs

30.01.23 - Week 13: Uncertainty in Deep Learning

06.02.23 - Round-up / Exam Q&A

The course material (lecture video, slides, exercise sheet) for "Week N" will be made available one week before the flipped classroom session for "Week N". For example, the material for Week 1 will be available on 17.10.22, and solutions to its exercises must be submitted by 25.10.22 at 23:59 at the latest. Virtual participation in flipped classroom sessions will be enabled via Zoom, and the meeting link can be found on ILIAS in the "Flipped Classroom" folder.

In the first session (on 17.10.22), you will get additional information about the course and the opportunity to ask general questions (and form groups!). While there is no need to prepare for this first session, we encourage you to start thinking about forming teams.

The last flipped classroom session will be held on 06.02.23.

Competition Results

Flower Classification Challenge

This semester, we organized an optional student competition. In this challenge, students were to train a model to perform class prediction on a flower dataset (more info here).

There were two tracks:

- Fast-track (models with fewer than 100k parameters)

- Large-track (models with fewer than 25M parameters)

The winners per track were determined based on the accuracy of the submitted models on a hidden test set.

The Fast-track podium:

- 1st place: Jelle Dehn, Soham Basu, Laura Neschen

- 2nd place: Adithya Anoop Thoniparambil, Daniel Rogalla

- 3rd place: Dominika Matus, M'Saydez Campbell, Florian Vogt

The Large-track podium:

- 1st place: Muhammad Ali

- 2nd place: Jelle Dehn, Soham Basu, Laura Neschen

- 3rd place: Premnath Srinivasan, Rishabh Verma, Ali Sarlak

Congratulations!

Tree Segmentation Challenge

After the success of the flower classification challenge, we organized a second optional student competition, spanning two semesters. In this challenge, students were to train a model to perform semantic segmentation on a tree dataset (more info here).

The podium:

- 1st place: Rishabh Verma, Premnath Srinivasan

- 2nd place: Ali Sarlak, Elham Elyasi

- 3rd place: Noah Lenagan

Congratulations!

Questions?

If you have a question, please post it in the ILIAS forum (so everyone can benefit from the answer).

Alternatively, you can also email dl-orga-ws22@cs.uni-freiburg.de

Course type: Lab Course

Time & Location:

- 10.10.22-13.10.22: Participation in the AutoML Fall School

- 19.10.22; 14:00-16:00; 082 HS 00 006: Introduction to Projects

- During the semester: Implement your own AutoML system

- TBA: Poster presentation

Organizers: André Biedenkapp, Rhea Sukthanker, Frank Hutter

Web page: HISinOne, Local Page

Note: You do not need to register for the fall school if you have registered for this course. Your participation in the fall school will be free of charge. You can register for the course via HISinOne .

Background

Applying machine learning (ML), and in particular deep learning (DL), in practice is a challenging task that requires a lot of expertise. Among other things, the success of ML/DL applications depends on many design decisions, including appropriate data preprocessing, the choice of a well-performing machine learning algorithm, and the tuning of its hyperparameters, which together give rise to a complex pipeline. Unfortunately, even experts need days, weeks, or even months to find well-performing pipelines and can still make mistakes when optimizing them. The lab course will start with a fall school featuring lectures on hot topics in AutoML such as "automating data science", "automated reinforcement learning", or "neural architecture search", as well as tutorials on various topics in AutoML. After this fall school, students are tasked with implementing their own AutoML system for a particular task or problem domain.

Requirements

We require that you have attended a lecture on

- Machine Learning, and/or

- Deep Learning

We strongly recommend that you have taken the AutoML lecture.

Organization

This lab course officially starts one week before the start of the lecture period. During that week, students take part in the AutoML fall school, to be held in Freiburg, to hear from world-leading AutoML experts about current hot topics in the field. After this week, we will meet to present potential projects, from which students are free to choose the one they want to tackle. We expect students to work in groups of up to three to implement their AutoML system. During the semester, the students will meet with a supervisor to discuss any issues they face when implementing their system. At the end of the semester, all groups will present their work in a poster session.

Grading

Grades are determined by the quality of the project.

Important Dates

- 10.10.22 - 13.10.22: AutoML Fall School

- 19.10.22, 14:00-16:00: Introduction to the topics in HS 00 006 (G.-Köhler-Allee 082)

- TBD: Poster Session

Kickoff Slides

Course type: Seminar

Time: Tuesdays 14:00-16:00

Location: G.-Köhler-Allee 051, R 03 026

Organizers: André Biedenkapp, Noor Awad, Frank Hutter

Web page: HISinOne, Local Page

You can register for the seminar via HISinOne.

Background

Hyperparameter optimization is a powerful approach to achieving the best performance on many different problems. However, automated approaches to this problem tend to ignore the iterative nature of many algorithms. With the dynamic algorithm configuration (DAC) framework, we can generalize over prior optimization approaches and handle the optimization of hyperparameters that need to be adjusted over multiple time steps. In this seminar, we will discuss applications (such as temporally extended epsilon-greedy exploration in RL) and domains (e.g., reinforcement learning, evolutionary algorithms, or deep learning) that can benefit from dynamic configuration methods. A large portion of the seminar will be dedicated to discussing papers that describe DAC methods that employ reinforcement learning to learn hyperparameter optimization policies for various domains.

Requirements

We require that you have taken lectures on

- Machine Learning, and/or

- Deep Learning

We strongly recommend that you have attended lectures on

- Automated Machine Learning

- Reinforcement Learning

Organization

Every week, all students read the relevant literature. Two students prepare presentations on the topics of the week and present them in the session. After each presentation, there will be a round of questions and discussion, and all participants are expected to take part. Each student has to write a short paper about their assigned topic, which is to be handed in one week after their presentation.

Grading

- Presentation: 40% (20min + 20min Q&A)

- Paper: 40% (4 pages in AutoML Conf format, due one week after your presentation)

- Participation in Discussions: 20%

Schedule

Welcome to the Deep Learning Lab, a joint teaching effort of the Robotics (R), Robot Learning (RL), Neurorobotics (NR), Computer Vision (CV), and Machine Learning (ML) Labs. For more details, see: https://rl.uni-freiburg.de/teaching/ss22/laboratory-deep-learning-lab

Fair and Interpretable Machine Learning

| Course type: | Seminar |

| Time: | Wednesday 16:00 - 18:00 |

| Location: | G.-Köhler-Allee 051, SR 00 034 |

| Organizers: | Janek Thomas , Noor Awad |

| Web page: | HISinOne |

Seminar on Fair and Interpretable Machine Learning

The seminar language will be English (even if all participants speak German, so that everyone practices presenting in English).

First meeting:

- 27th April, 16:00-18:00, SR 00 034 (G.-Köhler-Allee 051).

Regular meetings:

- Every Wednesday, 16:00-18:00, SR 00 034 (G.-Köhler-Allee 051).

Background

The seminar focuses on interpretable machine learning in the first half of the semester and fair machine learning in the second half.

We will discuss model-agnostic tools for fairness and interpretability as well as specific algorithms. Further topics include measuring fairness, a causal perspective on fairness and how to consider fairness in AutoML.

Organization

Each week: All students read the relevant literature. Three students prepare the topic with slides and applications. Three other students are assigned as discussants. Discussants have to meet with the presenting group before the session, give feedback, and prepare critical discussion points and open questions.

End of the semester: Each student has to write a short paper (10 pages) about their topic.

Requirements

We strongly recommend that you know the foundations of

- Machine Learning

- For some topics: Deep Learning

Main Literature

[1] Molnar, Christoph. Interpretable machine learning. Lulu.com, 2020. - https://christophm.github.io/interpretable-ml-book/

[2] Mehrabi, Ninareh, et al. "A survey on bias and fairness in machine learning." ACM Computing Surveys (CSUR) 54.6 (2021): 1-35.

[3] Barocas, Solon, Moritz Hardt, and Arvind Narayanan. "Fairness in machine learning." NIPS tutorial 1 (2017): 2.

Schedule

| Date | Topic | Main Ref. | Further Refs. |

| 27.04.2022 | Introduction, groups and topic assignments | - | |

| 04.05.2022 | - | | |

| 11.05.2022 | - | [1] Chapter 1-3 | |

| 18.05.2022 | Interpretable Machine Learning Methods | [1] Chapter 5 | Friedman, Jerome H., and Bogdan E. Popescu. "Predictive learning via rule ensembles." The annals of applied statistics 2.3 (2008): 916-954. |

| | | | Lou, Yin, et al. "Accurate intelligible models with pairwise interactions." Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining. 2013. |

| | | | Hofner, Benjamin, et al. "A framework for unbiased model selection based on boosting." Journal of Computational and Graphical Statistics 20.4 (2011): 956-971. |

| 25.05.2022 | Global Model-Agnostic Interpretability Methods | [1] Chapter 8 | Apley, Daniel W., and Jingyu Zhu. "Visualizing the effects of predictor variables in black box supervised learning models." Journal of the Royal Statistical Society: Series B (Statistical Methodology) 82.4 (2020): 1059-1086. |

| | | | Wei, Pengfei, Zhenzhou Lu, and Jingwen Song. "Variable importance analysis: a comprehensive review." Reliability Engineering & System Safety 142 (2015): 399-432. |

| | | | Kim, Been, Rajiv Khanna, and Oluwasanmi O. Koyejo. "Examples are not enough, learn to criticize! Criticism for interpretability." Advances in neural information processing systems 29 (2016). |

| 01.06.2022 | Local Model-Agnostic Interpretability Methods | [1] Chapter 9 | Goldstein, Alex, et al. "Peeking inside the black box: Visualizing statistical learning with plots of individual conditional expectation." Journal of Computational and Graphical Statistics 24.1 (2015): 44-65. |

| | | | Ribeiro, Marco Tulio, Sameer Singh, and Carlos Guestrin. "'Why should I trust you?' Explaining the predictions of any classifier." Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining. 2016. |

| | | | Karimi, Amir-Hossein, et al. "Model-agnostic counterfactual explanations for consequential decisions." International Conference on Artificial Intelligence and Statistics. PMLR, 2020. |

| 08.06.2022 | - | [3] Chapter 1 | |

| 15.06.2022 | Interpretability Methods for Neural Networks | [1] Chapter 10 | Zeiler, Matthew D., and Rob Fergus. "Visualizing and understanding convolutional networks." European conference on computer vision. Springer, Cham, 2014. |

| | | | Kim, Been, et al. "Interpretability beyond feature attribution: Quantitative testing with concept activation vectors (TCAV)." International conference on machine learning. PMLR, 2018. |

| | | | Jain, Sarthak, and Byron C. Wallace. "Attention is not explanation." arXiv preprint arXiv:1902.10186 (2019). |

| 22.06.2022 | Multi-Objective and constrained Optimization and Model Selection | TBD | Deb, Kalyanmoy, et al. "A fast and elitist multiobjective genetic algorithm: NSGA-II." IEEE transactions on evolutionary computation 6.2 (2002): 182-197. |

| | | | Knowles, Joshua. "ParEGO: A hybrid algorithm with on-line landscape approximation for expensive multiobjective optimization problems." IEEE Transactions on Evolutionary Computation 10.1 (2006): 50-66. |

| | | | Gardner, Jacob R., et al. "Bayesian Optimization with Inequality Constraints." ICML. Vol. 2014. 2014. |

| 29.06.2022 | Measures for Fairness | [2] Section 4.1 | Hardt, Moritz, Eric Price, and Nati Srebro. "Equality of opportunity in supervised learning." Advances in neural information processing systems 29 (2016). |

| | | | Kearns, Michael, et al. "An empirical study of rich subgroup fairness for machine learning." Proceedings of the conference on fairness, accountability, and transparency. 2019. |

| | | | Chouldechova, Alexandra. "Fair prediction with disparate impact: A study of bias in recidivism prediction instruments." Big data 5.2 (2017): 153-163. |

| 06.07.2022 | Debiasing Methods | [2] Section 5.1 | Mehrabi, Ninareh, et al. "Debiasing community detection: The importance of lowly connected nodes." 2019 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM). IEEE, 2019. |

| | | | Calmon, Flavio, et al. "Optimized pre-processing for discrimination prevention." Advances in neural information processing systems 30 (2017). |

| | | | Kamiran, Faisal, and Toon Calders. "Data preprocessing techniques for classification without discrimination." Knowle... |

Course type: Flipped-classroom Lecture + Exercise

Time: Wednesday 12:00-14:00

Location: HS 00 036 SCHICK-SAAL (G.-Köhler-Allee 101)

Organizers: Janek Thomas, André Biedenkapp, Edward Bergman, Neeratyoy Mallik, Frank Hutter

Web page: Link to Lecture Page

Background

Applying machine learning (ML), and in particular deep learning (DL), in practice is a challenging task that requires a lot of expertise. Among other things, the success of ML/DL applications depends on many design decisions, including appropriate data preprocessing, the choice of a well-performing machine learning algorithm, and the tuning of its hyperparameters, which together give rise to a complex pipeline. Unfortunately, even experts need days, weeks, or even months to find well-performing pipelines and can still make mistakes when optimizing them. The course will discuss meta-algorithmic approaches that automatically search for and obtain well-performing machine learning systems by means of automated machine learning (AutoML). Such AutoML systems allow for faster development of new ML/DL applications, require far less expert knowledge than building everything from scratch, and often even outperform human developers. In this lecture, you will learn how to use such AutoML systems, how to develop your own, and the ideas behind state-of-the-art AutoML approaches.

Requirements

We strongly recommend that you know the foundations of

- machine learning (ML)

- deep learning (DL)

We further recommend that you have hands-on experience with:

- Python (3.6+)

- machine learning

- deep learning

Participants should have attended at least one other course on ML and DL in the past.

Topics

The lectures are divided into several parts, including:

- Hyperparameter Optimization

- Bayesian Optimization for Hyperparameter Optimization

- Neural Architecture Search

- Dynamic Configuration

- Analysis and Interpretability of AutoML

- Algorithm Selection/Meta-Learning

Organization

The course will be taught in a flipped-classroom style. We will meet once a week for a combined Q&A session and exercise. Roughly every week, there will be a new exercise sheet. Most exercises will be practical and involve programming in Python and teamwork (teams of up to 3 students!), so that you learn how to apply AutoML in practice.

Lecture/Exercise: Wednesday 12:00 - 14:00

The material is publicly available via the AI-Campus platform. Please register on AI-Campus to access the materials. Exercises will be graded via GitHub Classroom. The links to the GitHub Classroom repositories are available here (the password for that page will be announced in the first session). The course will be taught in English.

Exam

Student conference:

“Student groups of three write a short report on one of three potential topics, peer-review the reports and present their work in a virtual poster session.”

- Report: 4 pages + an appendix with one page per student

- The contributions of each student need to be described in the report

- Paper template will be provided

- Will be organized on openreview.net

- Peer review:

  - Each student has to write a review for one other paper

  - We will provide some reviewing guidelines

- Poster Conference:

  - Jointly with Hannover

  - Online in gather.town

  - Oral presentation of your poster during a predefined time slot

- 20.07.2022: Project handout

- 31.08.2022: Report Deadline

- 09.09.2022: Review Deadline

- 13.09.2022 (16:00 CEST): Poster Submission Deadline (a template is available here)

- 15 - 16.09.2022: Poster Session

- 23.09.2022: Final Deadline (incorporate feedback from the poster session)

Conference Schedule

| Day | Time Slot | Poster Room | Paper IDs |

| Thursday 15.09.22 | 09:00 - 10:45 | Room 1 | 9, 16, 20, 24, 25 |

| Thursday 15.09.22 | 11:00 - 12:30 | Room 2 | 6, 7, 11, 12, 27 |

| Thursday 15.09.22 | 13:30 - 15:30 | Room 3 | 2, 13, 14, 15, 28, 30 |

| Friday 16.09.22 | 09:00 - 11:30 | Room 1 | 1, 3, 4, 5, 17, 18, 19 |

Please make sure to be at your poster during the time slot above. When it is not your turn to present, you can visit the other posters and discuss the approaches with the presenters. You are expected to take part in the whole conference. If you're unsure of your paper ID, you can check on OpenReview.

| Course type: | Oberseminar: Reading Group (no ECTS) -- Automated Machine Learning |

| Time: | Wednesdays, 13:00 |

| Web page: | Reading Group |

| Course type: | Oberseminar: Reading Group (no ECTS) -- Deep Learning and Hyperparameter Optimization |

| Time: | Wednesdays, 16:00 |

| Location: | online |

| Contact: | Samuel Müller |

| Course type: | B.Sc./M.Sc. projects |

| Kickoff meeting: | TBA |

| Location: | online only |

| Web page: | Open Projects |

Update Oct. 14: We added details about the seminar mode.

Update Oct. 8: The title and time of the seminar changed; we added the link to HISinOne.

| Course type: | Seminar |

| Time: | Tuesday, 10:00 - 11:30 am, first meeting: Oct. 19 |

| Location: | The course will be fully virtual/online. See ILIAS for the Zoom link. |

| Topic & Mode: | In this year's seminar, we will discuss papers about Self-Supervised Learning with a strong focus on computer vision. The details about the seminar mode will be discussed in the kick-off meeting on Oct 19th. We will begin our seminar with a background video that we discuss together on Oct 2nd. For the following sessions, students are asked to: 1) present a paper, 2) moderate the discussion of that paper, and 3) actively participate in the discussion of other paper presentations. We will try to assign papers based on your preferences, but there is no guarantee that you will receive the paper you requested. |

| Organizer: | Frank Hutter, Fabio Ferreira, Samuel Müller, Robin Schirrmeister |

| Web page: | ILIAS, HISinOne |

| Course type: | Lecture + Exercise |

| Time: | Wednesday, 12:15 - 13:45, first meeting: Oct. 20 |

| Location: | The course will be fully virtual/online. Weekly flipped classroom sessions will be held on Zoom. See ILIAS for the Zoom link. |

| Organizers: | Frank Hutter, Abhinav Valada, Steven Adriaensen, Samuel Müller, Yash Mehta, Niclas Vödisch |

| Web page: | ILIAS (please also register for all elements of this course module in HISinOne) |

Foundations of Deep Learning

Deep learning is one of the fastest-growing and most exciting fields. This course will give you a clear understanding of the fundamentals of deep learning, from the foundations of neural network architectures and learning techniques to everything in between.

Course Overview

The course will be taught in English and will follow a flipped classroom approach. Every week there will be:

- a video lecture

- an exercise sheet

- a flipped classroom session (virtual/online, Wednesdays 12:15 - 13:45)

- an exercise session with optional attendance (in-class/offline, Thursdays 10:15 - 11:45)

At the end, there will be a written exam (likely an ILIAS test).

Exercises must be completed in groups and submitted one week (+1 day) after their release. Your submissions will be graded, and you will receive weekly feedback. Your final grade will be based solely on the written exam; however, a passing grade for the exercises is a prerequisite for passing the course.

Online course: All material will be made available online, and participation will not require in-person presence. That said, we offer direct interaction with our tutors during the weekly in-class exercise sessions, where attendance is optional (Building 82, HS 00-006). You can also attend the digital flipped classroom sessions on campus using your own laptop and headphones (Building 101, HS 00 036).

Exam: The exam will likely be a test you complete on ILIAS. In-person presence may be required (tba).

Course Schedule

The following are the dates for the flipped classroom sessions:

20.10.21 - Kickoff: Course Organisation Info / Team Formation

27.10.21 - Week 1: Overview of Deep Learning

03.11.21 - Week 2: From Logistic Regression to MLPs

10.11.21 - Week 3: Backpropagation

17.11.21 - Week 4: Optimization

24.11.21 - Week 5: Regularization

01.12.21 - Week 6: Convolutional Neural Networks (CNNs)

08.12.21 - Week 7: Recurrent Neural Networks (RNNs)

15.12.21 - Week 8: Practical Methodology & Architectures

22.12.21 - Week 9: Hyperparameter Optimization

12.01.22 - Week 10: Neural Architecture Search

19.01.22 - Week 11: Attention & Transformers

26.01.22 - Week 12: Auto-Encoders, Variational Auto-Encoders, GANs

02.02.22 - Week 13: Uncertainty in Deep Learning

09.02.22 - Round-up / Exam Q & A

The course material (lecture video, slides, exercise sheet) for "Week N" will be made available one week before the flipped classroom session for "Week N". For example, the material for Week 1 will be available on 20.10.21, and solutions to its exercises must be submitted by 28.10.21 at 23:59 at the latest. We will be using Zoom; the meeting link can be found on ILIAS in the "Flipped Classroom" folder.
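The submission rule amounts to simple date arithmetic. The sketch below assumes, based only on the Week 1 example (release 20.10.21, due 28.10.21 at 23:59), that every sheet is due one week plus one day after its release at 23:59; the helper name `submission_deadline` is hypothetical, and the dates announced on ILIAS always take precedence.

```python
from datetime import date, datetime, time, timedelta

def submission_deadline(release: date) -> datetime:
    """Hypothetical helper: release day + 1 week + 1 day, at 23:59.

    Assumes the rule generalizes from the Week 1 example; check ILIAS.
    """
    due_day = release + timedelta(weeks=1, days=1)
    return datetime.combine(due_day, time(23, 59))

# Week 1 material is released on 20.10.21
print(submission_deadline(date(2021, 10, 20)).strftime("%d.%m.%y %H:%M"))  # 28.10.21 23:59
```

Using `timedelta` rather than adding 8 to the day number means deadlines that cross a month boundary (e.g. a release on 27.10.21) are handled correctly.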

In the first session (on 20.10.21), you will get additional information about the course and the opportunity to ask general questions (and form groups!). While there is no need to prepare for this first session, we encourage you to start thinking about forming teams. The last flipped classroom session is held on 09.02.22.

Competition Results

This semester, we organised an optional student competition in which students trained a model to perform class prediction on a flower dataset (more info here). There were two tracks:

- Fast-track (models with fewer than 100k parameters)

- Large-track (models with fewer than 25M parameters)

The winners per track were determined based on the accuracy of the submitted models on a hidden test set.
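Checking whether a model fits a track's budget comes down to counting its trainable parameters. The sketch below does this by hand for a purely hypothetical small CNN (three 3x3 convolutions, global average pooling, and a linear classifier with an assumed 10 classes; the actual number of flower classes is not stated here). It ignores layers such as batch normalization, which would add a few parameters; with PyTorch, the usual shortcut is `sum(p.numel() for p in model.parameters())`.

```python
# Parameter-count sketch for a hypothetical small CNN (not a competition model).

def conv2d_params(in_ch: int, out_ch: int, k: int, bias: bool = True) -> int:
    """Weights of a k x k convolution plus one bias per output channel."""
    return out_ch * in_ch * k * k + (out_ch if bias else 0)

def linear_params(in_f: int, out_f: int, bias: bool = True) -> int:
    """Weight matrix of a fully connected layer plus one bias per output."""
    return out_f * in_f + (out_f if bias else 0)

FAST_TRACK_LIMIT = 100_000      # "fewer than 100k parameters"
LARGE_TRACK_LIMIT = 25_000_000  # "fewer than 25M parameters"

def fits_budget(n_params: int, limit: int) -> bool:
    """Strictly below the limit, matching the 'fewer than' wording."""
    return n_params < limit

# Hypothetical architecture: 3 -> 16 -> 32 -> 64 channels, then global
# average pooling (no parameters) and a linear layer to 10 assumed classes.
layers = [
    conv2d_params(3, 16, 3),
    conv2d_params(16, 32, 3),
    conv2d_params(32, 64, 3),
    linear_params(64, 10),
]
total = sum(layers)
print(total, fits_budget(total, FAST_TRACK_LIMIT))  # 24234 True
```

Global average pooling keeps the classifier small because its input size depends only on the number of channels, not on the image resolution; most of this example's budget goes into the last convolution.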

The Fast-track podium:

- 1st place: Nisarga Nilavadi Chandregowda, Pablo Marhoff, Tidiane Ndir (accuracy: 90.49%)

- 2nd place: Bijay Gurung, Caoting Li, Kartik Yadav (accuracy: 82.80%)

- 3rd place: Uygar Akkoc, Aron Bahram, Samir Garibov (accuracy: 73.12%)

The Large-track podium:

- 1st place: Paweł Bugyi, Abhijeet Nayak, Preethi Sivasankaran (accuracy: 95.15%)

- 2nd place: Akshay Chandra Lagandula, Sai Prasanna Raman, John Robertson (accuracy: 92.49%)

- 3rd place: Bijay Gurung, Caoting Li, Kartik Yadav (accuracy: 91.28%)

Congratulations!

Questions?

If you have a question, please post it in the ILIAS forum (so everyone can benefit from the answer). Alternatively, you can email dl-orga-ws21@cs.uni-freiburg.de

| Course type: | B.Sc./M.Sc. projects |

| Kickoff meeting: | TBA |

| Location: | online only |

| Web page: | Open Projects |

| Course type: | Lab Course |

| Time: | Tuesday, 14:00-16:00 (Beginning Apr 20, 2021) |

| Location: | online only |

| Organizer: | Frank Hutter, Fabio Ferreira, Jörg Franke, Arbër Zela, Samuel Müller, Yash Mehta |

| Web page: | Website , HISinOne |

| Course type: | Flipped-classroom Lecture + Exercise |

| Time: | Wednesday 14:00 - 16:00 |

| Location: | online only |

| Organizer: | Frank Hutter, André Biedenkapp, Gresa Shala |

| Web page: | AutoML |

| Course type: | Oberseminar: Reading Group (no ECTS) -- Deep Learning and Hyperparameter Optimization |

| Time: | Wednesdays, 16:00 |

| Location: | online |

| Contact: | Samuel Müller |

| Course type: | Oberseminar: Reading Group (no ECTS) -- Automated Machine Learning |

| Time: | Wednesdays, 13:00 |

| Web page: | Reading Group |

| Course type: | B.Sc./M.Sc. projects |

| Location: | Kitchen, Building 074 |

| Web page: | Open Projects |

| Course type: | Seminar |

| Time: | Wednesday, 12:30 - 2pm, first meeting: Nov. 4 |

| Location: | The seminar will be fully virtual/online and held on Zoom. See ILIAS for the Zoom link. |

| Organizer: | Frank Hutter, Noor Awad, Fabio Ferreira |

| Web page: | ILIAS , HISinOne |

Organizational

- we will meet every Wednesday, 12:30-2pm, starting Nov 4

- the seminar will be fully virtual and carried out with Zoom

- we will announce the Zoom meeting link on ILIAS

- link to ILIAS course

- link to HISinOne course

- list of papers released at end of the kick-off meeting

General

Welcome to the Automated Machine Learning Seminar webpage. The seminar will be held in English and will comprise papers from three areas: learning to learn (L2L), hyperparameter optimization (HPO), and neural architecture search (NAS). We will start on Wednesday, Nov. 4, at 12:30-2pm on Zoom. Check out the ILIAS course (link above) for the Zoom link. The papers discussed in this seminar will be announced at the end of the kick-off meeting. We assume that all participants have understood the fundamental concepts presented in the AutoML course.

Procedure