Research Topics & Interests

Fundamental HPO

We explore core research topics in hyperparameter optimization, including black-box optimization with Bayesian optimization, known for its sample efficiency and flexibility, and user priors, which integrate human knowledge to guide the optimization process. Additionally, we investigate learning curve extrapolation and multi-fidelity optimization to accelerate optimization and allocate resources more efficiently.
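As a rough illustration of the multi-fidelity idea, the sketch below implements plain successive halving: every configuration is first evaluated at a small budget, only the best fraction is kept, and the budget is then increased for the survivors. The objective `toy_loss`, the learning-rate search space, and the parameter names `min_budget` and `eta` are assumptions made up for this example; it is not one of the methods listed under Publications.

```python
import math
import random


def toy_loss(learning_rate: float, budget: int) -> float:
    """Hypothetical objective: best near lr = 0.1, and lower with more budget."""
    distance = abs(math.log10(learning_rate) - math.log10(0.1))
    return distance + 1.0 / budget


def successive_halving(configs, min_budget=1, eta=2):
    """Evaluate all configs cheaply, keep the best 1/eta, repeat at eta times the budget."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Rank the remaining configurations at the current (cheap) fidelity.
        ranked = sorted(survivors, key=lambda lr: toy_loss(lr, budget))
        # Keep the top 1/eta fraction, but never fewer than one configuration.
        survivors = ranked[: max(1, len(ranked) // eta)]
        # Survivors earn a larger budget in the next round.
        budget *= eta
    return survivors[0]


rng = random.Random(0)
# Sample 16 learning rates log-uniformly from [1e-4, 1].
candidates = [10 ** rng.uniform(-4, 0) for _ in range(16)]
best_lr = successive_halving(candidates)
print(f"selected learning rate: {best_lr:.4g}")
```

In this toy setting the ranking does not change with the budget, so cheap evaluations are perfectly informative. In practice low-fidelity rankings can be misleading, which is one reason multi-fidelity methods are an active research topic.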

HPO for large-scale Deep Learning

We aim to enable Hyperparameter Optimization for large-scale deep learning by dramatically reducing the number of full model trainings required for HPO. Our approach includes resource-efficient methods like forecasting performance from early training statistics (e.g., initial learning curve), inferring hyperparameter-aware scaling laws to predict large model behavior from smaller ones, and employing expert knowledge and meta-learning to improve sample efficiency.
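To make the idea of forecasting from an initial learning curve concrete, here is a minimal sketch that fits a power law, loss(t) = a * t^(-b), to the first few training steps and extrapolates to a much later step. The power-law form, the synthetic data, and the helper names `fit_power_law` and `predict` are assumptions for illustration only. Real learning curves are noisy and often level off at a loss floor, and the approaches in the publications below are considerably more sophisticated.

```python
import math


def fit_power_law(steps, losses):
    """Least-squares fit of log(loss) = log(a) - b * log(step); returns (a, b)."""
    xs = [math.log(s) for s in steps]
    ys = [math.log(v) for v in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    a = math.exp(mean_y - slope * mean_x)
    return a, -slope


def predict(a, b, step):
    """Extrapolate the fitted power law to a later training step."""
    return a * step ** (-b)


# Synthetic "early" learning curve, generated from a known power law (a=2, b=0.5).
early_steps = [1, 2, 4, 8, 16]
early_losses = [2.0 * s ** -0.5 for s in early_steps]

a, b = fit_power_law(early_steps, early_losses)
forecast = predict(a, b, 1024)
print(f"a={a:.3f}, b={b:.3f}, forecast at step 1024: {forecast:.4f}")
```

The same log-log fit could in principle be applied across model sizes rather than training steps, which is the basic intuition behind scaling-law extrapolation from small models to large ones.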

Benchmarks & Tools

We develop a range of high-quality benchmarks and open-source tools to advance hyperparameter optimization research. These tools are designed to make our research accessible and practical for non-experts. Additionally, our benchmarks provide a robust framework for comparison and evaluation across HPO studies, promoting scientific rigor and facilitating researchers’ work. Widely adopted in the HPO community, our tools and benchmarks remain a standard in the field.

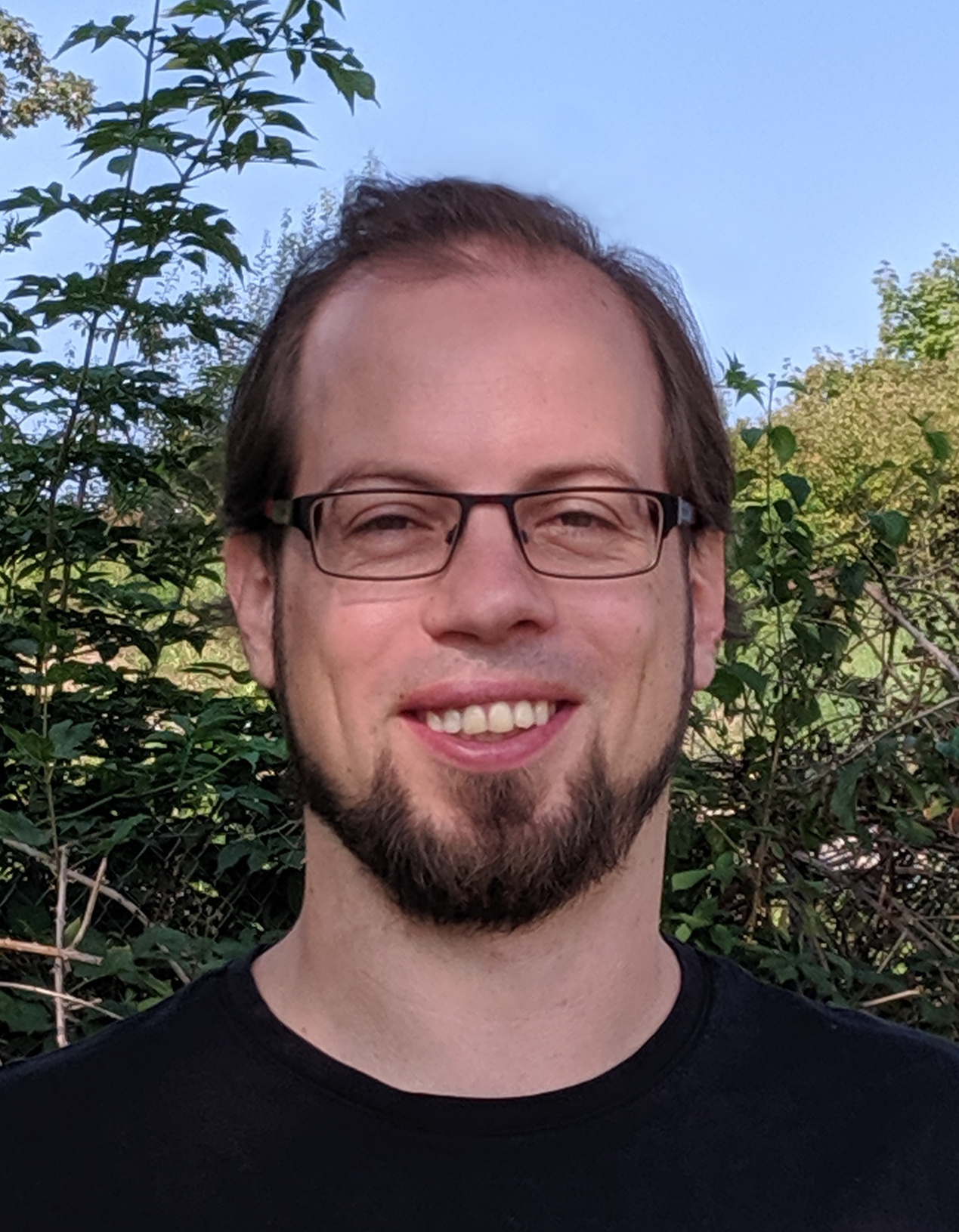

Staff Members

Students

Theodoros Athanasiadis

Soham Basu

Samir Garibov

Alumni

Anton Merlin Geburek

Shuhei Watanabe

Publications

2025

Frozen Layers: Memory-efficient Many-fidelity Hyperparameter Optimization. In: Proceedings of the Fourth International Conference on Automated Machine Learning (AutoML 2025), Main Track, 2025.

2024

Warmstarting for Scaling Language Models. In: NeurIPS 2024 Workshop Adaptive Foundation Models, 2024.

LMEMs for post-hoc analysis of HPO Benchmarking. In: Proceedings of the Third International Conference on Automated Machine Learning (AutoML 2024), Workshop Track, 2024.

Fast Benchmarking of Asynchronous Multi-Fidelity Optimization on Zero-Cost Benchmarks. In: Proceedings of the Third International Conference on Automated Machine Learning (AutoML 2024), ABCD Track, 2024.

In-Context Freeze-Thaw Bayesian Optimization for Hyperparameter Optimization. In: Proceedings of the 41st International Conference on Machine Learning (ICML), 2024.

2023

PriorBand: Practical Hyperparameter Optimization in the Age of Deep Learning. In: Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS), 2023.

Efficient Bayesian Learning Curve Extrapolation using Prior-Data Fitted Networks. In: Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS), 2023.

2022

Automated Dynamic Algorithm Configuration. In: Journal of Artificial Intelligence Research (JAIR), vol. 75, pp. 1633-1699, 2022.

2021

HPOBench: A Collection of Reproducible Multi-Fidelity Benchmark Problems for HPO. In: Vanschoren, J.; Yeung, S. (Ed.): Proceedings of the Neural Information Processing Systems Track on Datasets and Benchmarks, 2021.

DEHB: Evolutionary Hyberband for Scalable, Robust and Efficient Hyperparameter Optimization. In: Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence (IJCAI'21), ijcai.org, 2021.